Computing cluster

Brief description

The computing cluster consists of physical servers supplemented by a remote cloud infrastructure. It is built on the principles of high availability and fault tolerance, meets the current technical requirements, and can be scaled both vertically and horizontally.

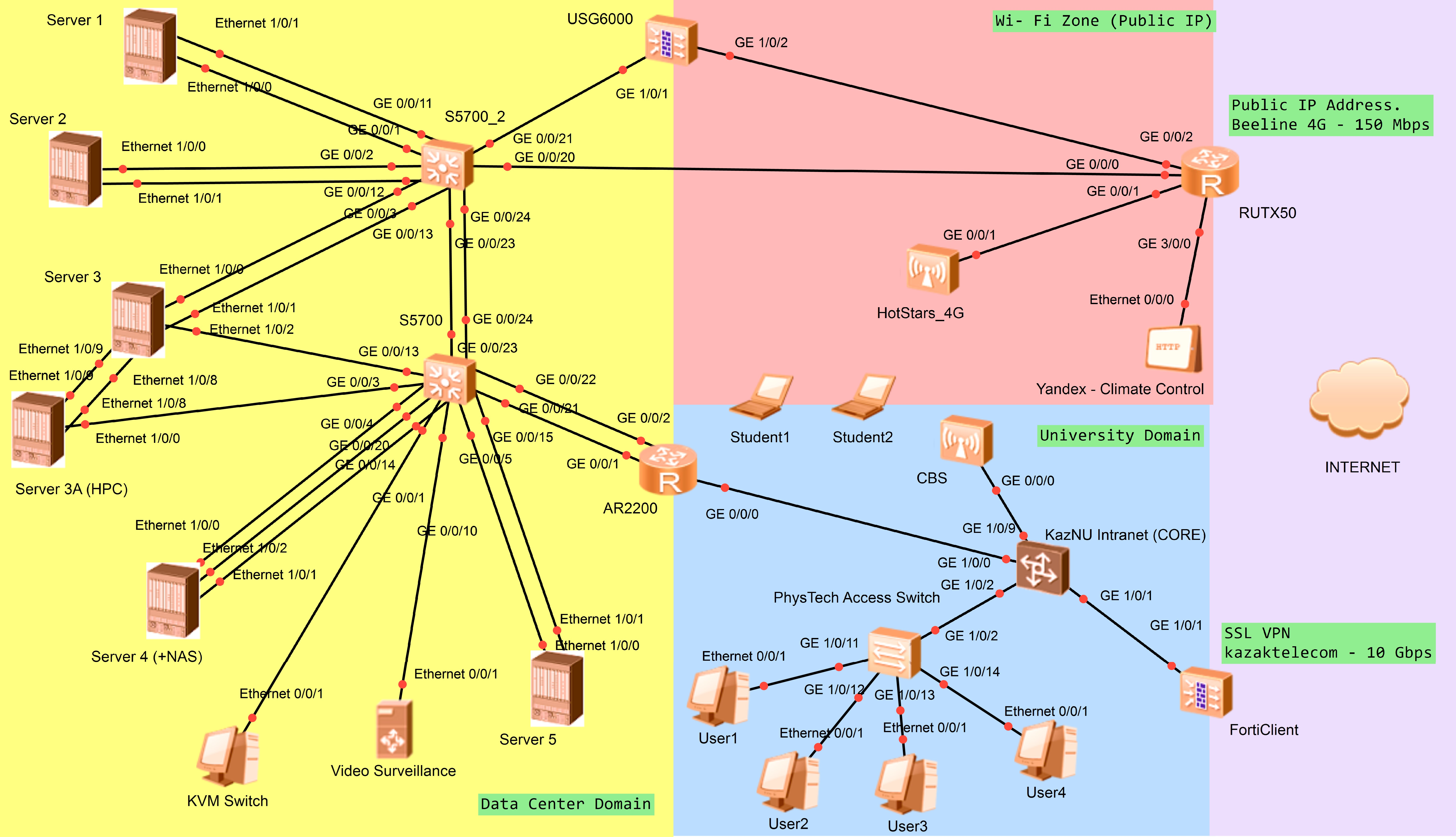

The flexibility and modularity of the cluster are ensured by a unified control system that operates through distributed power and access systems. This provides 24/7 operation of the computing nodes, together with backup, migration, data storage and access, including “hot” replacement and deployment of both individual systems and entire nodes.

Access is provided over a main 1 Gbps channel using a secure SSL VPN topology. A backup 4G radio channel (50 Mbps) is deployed through a firewall with an external public IP address. The cloud part runs on rented cloud file hosting (Microsoft OneDrive) to support data distribution and access. Each computing node is a set of virtual machines on a common hypervisor platform (mainly Hyper-V, in some cases ESXi) built on top of shared resources distributed across the physical servers (Xeon processors, RAM, and drives in RAID arrays).

Power is provided by independent power supplies and a backup system, and the power management system supports remote access. Machine-to-machine connections within the domain use 10 Gbps interfaces. The network infrastructure uses link aggregation and redundant connections. Separate broadcast (VLAN) domains isolate the power management and monitoring-sensor system, the management subnet, and the access subnet from one another.
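The Python sketch below illustrates the subnet separation by sorting addresses into the management and access subnets. The subnet boundaries are an assumption inferred from the Server Table, where management addresses are 192.168.1.x, access addresses are 192.168.0.x, and both subnets are /24. The power-management and monitoring VLANs are not listed there, so the sketch leaves them out.

```python
import ipaddress

# Assumption inferred from the Server Table (not stated explicitly):
# management VLAN = 192.168.1.0/24, access VLAN = 192.168.0.0/24.
SUBNETS = {
    "management": ipaddress.ip_network("192.168.1.0/24"),
    "access": ipaddress.ip_network("192.168.0.0/24"),
}


def classify(address: str) -> str:
    """Return the name of the subnet an address belongs to, or 'unknown'."""
    ip = ipaddress.ip_address(address)
    for name, net in SUBNETS.items():
        if ip in net:
            return name
    return "unknown"


if __name__ == "__main__":
    # S3's two management addresses, S3's access address, and one VM address.
    for addr in ["192.168.1.73", "192.168.1.173", "192.168.0.73", "192.168.0.231"]:
        print(addr, "->", classify(addr))
```

A check like this could be used to confirm that a new host or VM has been given an address in the intended VLAN.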

We use our own Linux shell script to parallelize calculations.
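The in-house script is not reproduced here. As a hypothetical illustration of the same idea, the Python sketch below runs independent calculations side by side with a cap on concurrency, much like `xargs -P`. It launches each external command from a thread pool. The job list and worker count are placeholders.

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor


def run_job(cmd: list[str]) -> tuple[int, str]:
    """Run one external command; return its exit code and stripped stdout."""
    result = subprocess.run(cmd, capture_output=True, text=True)
    return result.returncode, result.stdout.strip()


def run_parallel(commands: list[list[str]], max_workers: int) -> list[tuple[int, str]]:
    """Run independent commands with at most `max_workers` running at once.

    Each worker thread only waits on its own child process, so the actual
    calculations run in parallel as separate OS processes. Results are
    returned in the same order as `commands`.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_job, commands))


if __name__ == "__main__":
    # Placeholder workload: eight tiny Python jobs standing in for real calculations.
    jobs = [[sys.executable, "-c", f"print({i} * {i})"] for i in range(8)]
    for code, out in run_parallel(jobs, max_workers=4):
        print(code, out)
```

Threads are enough here because the heavy work happens in the child processes. `max_workers` would typically be matched to the number of threads allocated to the VM running the jobs.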

The cluster hardware consists of six HP Enterprise ProLiant DL380 Gen10 / Gen10 Plus servers.

Server Table

| Server | Processor | Comp. resource | Memory | Storage | Management and Access IPs (/24) |
|---|---|---|---|---|---|
| S1 | Gen10+, 2 x Xeon Gold 6330 | 2.00 GHz, 56 cores / 112 threads | 4 x 32 GB DDR4-2933 | 3 x 960 GB (2.62 TB) | 192.168.1.71, 192.168.0.71 |
| S2 | Gen10+, 2 x Xeon Gold 6348 | 2.60 GHz, 56 cores / 112 threads | 8 x 32 GB DDR4-3200 | 8 x 960 GB (3.49 TB, RAID 60) | 192.168.1.72, 192.168.0.72 |
| S3 | Gen10, 2 x Xeon Gold 6248R | 3.00 GHz, 48 cores / 96 threads | 4 x 32 GB DDR4-2933 | 480 GB + 1.92 TB + 2 x 960 GB | 192.168.1.73, 192.168.1.173, 192.168.0.73 |
| S3a | Gen10, 2 x Xeon Gold 6248R | 3.00 GHz, 48 cores / 96 threads | 4 x 32 GB DDR4-2933 | 2 x 960 GB | 192.168.1.77, 192.168.0.77 |
| S4 | Gen10, 2 x Xeon Gold 5218R | 2.10 GHz, 40 cores / 80 threads | 2 x 32 GB DDR4-2666 | 3 x 480 GB + 4 x 960 GB | 192.168.1.74, 192.168.1.174, 192.168.0.74 |
| S5 | Gen10, 1 x Xeon Platinum 8180 | 2.50 GHz, 28 cores / 56 threads | 2 x 32 GB DDR4-2666 | 1 x 960 GB | 192.168.1.75, 192.168.0.75 |
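The capacity figures in the Storage column appear to be in binary units (TiB), which would explain why they are lower than the raw drive totals. As a worked check for S2, assume the RAID 60 set consists of $s = 2$ RAID 6 spans of $n = 4$ drives each (the span layout is not stated). Take the drive capacity as $c = 960$ GB, with two parity drives per span:

$$
C = s\,(n-2)\,c = 2 \times (4-2) \times 960\ \text{GB} = 3840\ \text{GB} = \frac{3840 \times 10^{9}}{2^{40}}\ \text{TiB} \approx 3.49\ \text{TiB}
$$

By the same conversion, S1's $3 \times 960\ \text{GB} = 2880\ \text{GB} \approx 2.62$ TiB. This matches the listed figure if no capacity is reserved for parity.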

Virtual Machines

| Server | Virtual Machine | Comp. Resources (CPU threads / Memory / Storage) | IP Address and Network Capability | External NAT Ports | Internal NAT Ports |
|---|---|---|---|---|---|
| S1 | Ubuntu18_MHD | 8 th. / 6-8 GB / 420 GB | 192.168.0.211 / 10 Gbit/s (shared) | -- | -- |
| | Ubuntu18_CVLab | 8 th. / 108 GB / 127 GB | 192.168.0.212 / 10 Gbit/s (shared) | 22212 (SSH) | -- |
| | Ubuntu22_MESA | 96 th. / 4-8 GB / 228 GB | 192.168.0.213 / 10 Gbit/s (shared) | -- | 33813 (RDP) / 22213 (SSH) |
| S2 | Ubuntu18_SPH | 8 th. / 16 GB / 320 GB | 192.168.0.221 / 10 Gbit/s (shared) | -- | 33811 (RDP) |
| | Ubuntu18_Hdust | 104 th. / 224 GB / 420 GB | 192.168.0.222 / 10 Gbit/s (shared) | 8440 (SSH) | -- |
| S3 | Ubuntu18_SLURM_M | 8 th. / 16 GB / 320 GB | 192.168.0.231 / 40 Gbit/s (direct) | -- | -- |
| | Ubuntu18_LVCL | 40 th. / 60 GB / 0.8 + 1.7 TB | 192.168.0.232 / 1 Gbit/s (shared) | 3399 (RDP) | -- |
| | Ubuntu18_Phantom | 56 th. / 56 GB / 228 GB | 192.168.0.233 / 1 Gbit/s (shared) | -- | 33833 (RDP) / 22233 (SSH) |
| S3a | Ubuntu18_SLURM_C | 112 th. / 128 GB / 228 GB | 192.168.0.237 / 40 Gbit/s (direct) | -- | -- |
| S4 | Ubuntu16_IRAFv2.16 | 16 th. / 8 GB / 420 GB | 192.168.0.241 / 1 Gbit/s (shared) | -- | -- |
| | Ubuntu18_IRAFv2.18 | 16 th. / 8 GB / 420 GB | 192.168.0.242 / 1 Gbit/s (shared) | -- | -- |
| | Ubuntu18_Students | 16 th. / 16 GB / 920 GB | 192.168.0.243 / 1 Gbit/s (shared) | -- | -- |
| | Ubuntu22_2024_v1 | 16 th. / 8 GB / 240 GB | 192.168.0.244 / 1 Gbit/s (shared) | -- | 33844 (RDP) / 22244 (SSH) |
| | Windows11_RDP | 8 th. / 8 GB / 240 GB | 192.168.0.249 / 1 Gbit/s (shared) | 8391 (RDP) | -- |
| | Windows10_OneDrive | 8 th. / 16 GB / 1.7 TB | 192.168.0.200 / 1 Gbit/s (shared) | 8442 (RDP) | -- |
| S5 | Windows11_TensorFlow | 56 th. / 64 GB / 960 GB + 2 TB SATA / GPU Nvidia A2 | 192.168.0.251 / 1 Gbit/s (direct) | -- | -- |

HP Enterprise ProLiant DL380 Gen10 Plus (PCIe 4.0 support), 2U rack

- Processor: 2x Intel Xeon Gold 6330 (28 cores / 56 threads each, 112 threads in total), 2.60 GHz (max. 3.5 GHz), 42 MB cache.

- GPU: 1xNvidia A2

- RAM: 128 GB, 4x32GB, DDR4-3200MHz.

- Storage: 2.8 TB (3 x 960 GB SSD) in an 8 x SFF drive bay, with an MR416i-p NVMe/SAS 12G hardware RAID controller.

- Network: 2x10GE (SFP+, DAC, access), 1x1GE (management).

- Power supply: 1x1600W and 1x800W (backup power).

Computing Resources

In total, the following resources are available:

- 552 threads on 11 physical CPUs (Xeon Gold 5218R / 6248R / 6330 / 6348 and Xeon Platinum 8180 processors, 2.0-3.0 GHz base clock)

- 1 GPU (Nvidia A2)

- 768 GB memory (DDR4, 2666-3200 MHz)

- 23 TB Storage (SSD / SAS drives on hardware RAID)

- Cluster hardware consists of 6 HP Enterprise DL380 Gen10 / Gen10 Plus units.
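The sketch below makes the arithmetic behind these totals reproducible by summing the per-server figures from the Server Table. It takes thread counts from the Comp. resource column, counts memory as the number of 32 GB modules, and measures storage as raw drive capacity before RAID. The transcription is an assumption of this example, including reading S3's "1.92" drive as 1.92 TB.

```python
from dataclasses import dataclass

DIMM_GB = 32  # every server in the table uses 32 GB modules


@dataclass
class Server:
    name: str
    cpus: int              # populated CPU sockets
    threads: int           # hardware threads (Comp. resource column)
    dimms: int             # number of 32 GB memory modules
    drives_gb: list[int]   # raw drive capacities in GB, before RAID


# Transcribed from the Server Table (S3's "1.92" read as 1.92 TB = 1920 GB).
SERVERS = [
    Server("S1", 2, 112, 4, [960] * 3),
    Server("S2", 2, 112, 8, [960] * 8),
    Server("S3", 2, 96, 4, [480, 1920, 960, 960]),
    Server("S3a", 2, 96, 4, [960] * 2),
    Server("S4", 2, 80, 2, [480] * 3 + [960] * 4),
    Server("S5", 1, 56, 2, [960]),
]


def totals(servers: list[Server]) -> dict[str, float]:
    """Sum CPUs, threads, memory (GB) and raw storage (decimal TB)."""
    return {
        "cpus": sum(s.cpus for s in servers),
        "threads": sum(s.threads for s in servers),
        "memory_gb": sum(s.dimms * DIMM_GB for s in servers),
        "raw_storage_tb": sum(sum(s.drives_gb) for s in servers) / 1000,
    }


if __name__ == "__main__":
    print(totals(SERVERS))
```

Keeping the per-server figures in one list means the totals can be recomputed whenever a server or module is added.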

Access capabilities

- SSH and RDP (VNC on demand) via a public IP address

- User data can also be accessed through Microsoft cloud storage (OneDrive, a 1 TB file hosting service) via shared links.
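The sketch below illustrates how the external NAT ports from the Virtual Machines table might be used. It builds client command lines for SSH (OpenSSH `ssh -p`) and RDP (FreeRDP `xfreerdp /v:host:port`). The public IP address and user names are hypothetical placeholders. VMs listed with internal ports only are assumed to be reachable through the VPN and are left out.

```python
# Hypothetical placeholder: the real public IP address is not given here.
PUBLIC_HOST = "PUBLIC_IP"

# External NAT ports as listed in the Virtual Machines table.
EXTERNAL_PORTS = {
    "Ubuntu18_CVLab": ("ssh", 22212),
    "Ubuntu18_Hdust": ("ssh", 8440),
    "Ubuntu18_LVCL": ("rdp", 3399),
    "Windows11_RDP": ("rdp", 8391),
    "Windows10_OneDrive": ("rdp", 8442),
}


def connect_command(vm: str, user: str, host: str = PUBLIC_HOST) -> list[str]:
    """Build a client command line for a VM reachable via an external NAT port."""
    protocol, port = EXTERNAL_PORTS[vm]
    if protocol == "ssh":
        # OpenSSH selects a non-default port with -p.
        return ["ssh", "-p", str(port), f"{user}@{host}"]
    # FreeRDP takes the server as /v:host:port and the user name as /u:.
    return ["xfreerdp", f"/v:{host}:{port}", f"/u:{user}"]


if __name__ == "__main__":
    for vm in EXTERNAL_PORTS:
        print(vm, ":", " ".join(connect_command(vm, "username")))
```

On Windows, the built-in client accepts the same `host:port` form (`mstsc /v:host:port`).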

Software and hardware topology

- Host OS based on Linux (Ubuntu 18.04 LTS)

- Virtualization on Hyper-V Server 2019

- Internet connection (main uplink, access LAN): 1 Gigabit Ethernet (1 GE); the best measured ping is about 2-5 ms and the speed about 750 Mbps, according to speedtest.net

- A backup 4G cellular link is available (50 Mbps); it is also used for public IP access

- Main power supply 6.5 kW (Backup power 2.2 kW)

- Intra-cluster communication via 10GBASE interfaces (SFP+, DAC)

- Management VLAN network based on 1 GE interfaces, with remote access via FortiGate SSL VPN.

- Firewalls (NGFW), system monitoring tools, routing and switching features such as link aggregation and connection redundancy are implemented.

- The cluster can be scaled both vertically and horizontally.

- OS workload checkpoints

- Backup and migration for OS

- Cloning and imaging with current configurations and built-in applications.

- Fast deployment features for OS and computing nodes

- Hot-swappable hardware components in the cluster servers

O&M (operations and maintenance) features